Tasks

Task 1: Meme Detection

The lack of consensus around what defines a meme (Shifman, 2013) has led to different definitions, focusing on circulation (Dawkins, 2006; Davison, 2012), formal features (Milner, 2016), or content (Gal et al., 2016; Knobel and Lankshear, 2007).

For this dataset, manual coding focused on both formal aspects (such as layout, multimodality and manipulation) and content (e.g. ironic intent). The exponential increase in visual production, however, warrants an automated approach, which might further tap into stable and generalizable aspects of memes, considering form, content and circulation.

Given the dataset without the variable strictly related to memetic status, participants must provide a binary label for each image, distinguishing memes from non-memes.

Baseline and Evaluation

The task is evaluated with F1, precision and recall scores. The baseline is given by the performance of a random classifier, which labels 50% of images as meme.
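As an illustration, the evaluation metrics and a random baseline could be sketched as follows. This is a minimal sketch and not the organizers' scoring script: the function names are hypothetical, the meme class is assumed to be encoded as 1, and "labels 50% of images as meme" is read here as labelling exactly half of the items, which is only one possible interpretation.

```python
import random


def precision_recall_f1(gold, pred, positive=1):
    """Precision, recall and F1 for the positive class (assumed to be 1 = meme)."""
    pairs = list(zip(gold, pred))
    tp = sum(1 for g, p in pairs if g == positive and p == positive)
    fp = sum(1 for g, p in pairs if g != positive and p == positive)
    fn = sum(1 for g, p in pairs if g == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denominator = precision + recall
    f1 = 2 * precision * recall / denominator if denominator else 0.0
    return precision, recall, f1


def random_baseline(n_items, seed=0):
    """Label half of the items (rounded down) as meme, at random positions.

    Assumption: "50% of images" means an exact split rather than an
    independent coin flip per image.
    """
    rng = random.Random(seed)
    n_memes = n_items // 2
    labels = [1] * n_memes + [0] * (n_items - n_memes)
    rng.shuffle(labels)
    return labels
```

A submission would then be scored with `precision_recall_f1(gold_labels, predicted_labels)`, and the baseline with `precision_recall_f1(gold_labels, random_baseline(len(gold_labels)))`.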

Task 2: Hate Speech Identification

Hate speech has become a relevant issue for social media platforms. Even though the automatic classification of posts may lead to censorship of non-offensive content (Gillespie, 2018), the use of machine learning techniques has become increasingly crucial, since manual filtering is a very time-consuming task for annotators (Zampieri et al., 2019a).

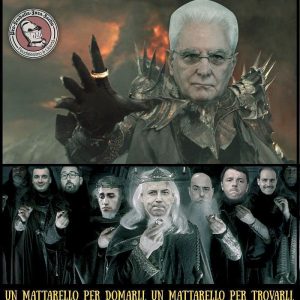

In this direction, SemEval2020 proposed “Memotion Analysis” among its tasks, to classify sarcastic, humorous, and offensive memes. This kind of analysis takes on particular relevance when applied to political content, since memes about political topics are a powerful tool of political criticism (Plevriti, 2014). For these reasons, the proposed task aims at detecting memes with offensive content. Following the definition of Zampieri et al. (2019b), an offensive meme contains any form of profanity or a targeted offense, veiled or direct, such as insults, threats, profane language or swear words. Recent studies have shown that multimodal analysis is crucial in such a task (Sabat et al., 2019).

The dataset will consist only of memes: for each, we will provide as input the image, the text transcription, and the image vector representation. Participants’ goal is to assign a binary label to each meme, with value 1 for offensive content and 0 otherwise.

Baseline and Evaluation

The baseline is given by the performance of a classifier labeling a meme as offensive when the meme text contains at least one swear word. The task is evaluated with F1, precision and recall scores.
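The swear-word baseline could be sketched as a simple lexicon lookup over the text transcription. The lexicon below is a hypothetical English placeholder: the task description does not specify which word list is used, and whole-token matching is likewise an assumption.

```python
import re

# Hypothetical placeholder lexicon; the actual word list is not given in the text.
SWEAR_WORDS = frozenset({"damn", "hell"})


def swear_word_baseline(meme_text, lexicon=SWEAR_WORDS):
    """Return 1 (offensive) if any token of the transcription is in the lexicon, else 0."""
    # Lowercase and split into word tokens so that matching is on whole words only.
    tokens = re.findall(r"\w+", meme_text.lower())
    return 1 if any(token in lexicon for token in tokens) else 0
```

Matching on whole tokens avoids flagging harmless words that merely contain a lexicon entry as a substring, at the cost of missing inflected or obfuscated forms.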

Task 3: Event Clustering

Social media react to the real world by commenting in real time on mediatised events in a way that disrupts traditional usage patterns (Al Nashmi, 2018). The ability to understand which events are represented, and how, therefore becomes relevant in the context of a hyper-productive internet. Following Giorgi & Rama (2019, forthcoming), most of the memes in our dataset can be traced to four main events:

- the beginning of the government crisis;

- the beginning of consultations involving political parties and Conte’s Senate speech;

- Giuseppe Conte is called by the President to form a new government;

- the 5SM holds a vote on its direct democracy platform, Rousseau.

The aim of this task is to cluster the memes into these four categories, plus a residual category for memes that fit none of them.

Participants’ goal is to apply supervised or unsupervised techniques to cluster the memes so that memes referring to the same event are placed in the same cluster. Participants are therefore required to specify the type of algorithm adopted. In a first mandatory run (“labelled run”), all participants must associate each item of the dataset with one of the events provided in the training set. Participants approaching the task with clustering can submit an additional run in which items are grouped into an arbitrary number of clusters (“unlabelled run”). This task will therefore have two separate rankings.

Baseline and Evaluation

In the labelled run, the models will be ranked via F1-score. In this setting, the baseline is given by the performance of a classifier labeling every meme as belonging to the most frequent class. A separate ranking will be produced for clustering systems participating in the unlabelled run, based on the silhouette coefficient. In this case, the baseline is given by the performance of a model that organizes the memes solely on the basis of their publication date.
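The majority-class baseline and the silhouette coefficient could be sketched as follows. This is only an illustration under stated assumptions: the function names are hypothetical, memes are assumed to be represented as numeric feature vectors, and Euclidean distance is assumed because the text does not say which distance the official evaluation uses.

```python
from collections import Counter
from math import dist


def majority_class_baseline(train_labels, n_test):
    """Assign the most frequent training label to every test item."""
    most_common_label = Counter(train_labels).most_common(1)[0][0]
    return [most_common_label] * n_test


def silhouette_coefficient(points, cluster_ids):
    """Mean silhouette over all points, using Euclidean distance (an assumption)."""
    clusters = {}
    for point, cid in zip(points, cluster_ids):
        clusters.setdefault(cid, []).append(point)
    if len(clusters) < 2:
        raise ValueError("the silhouette coefficient needs at least two clusters")

    scores = []
    for point, cid in zip(points, cluster_ids):
        own = clusters[cid]
        if len(own) == 1:
            # By convention, a point alone in its cluster scores 0.
            scores.append(0.0)
            continue
        # a: mean distance to the other members of the same cluster
        # (the point's zero distance to itself is excluded via len(own) - 1).
        a = sum(dist(point, other) for other in own) / (len(own) - 1)
        # b: smallest mean distance to the members of any other cluster.
        b = min(
            sum(dist(point, other) for other in members) / len(members)
            for other_cid, members in clusters.items()
            if other_cid != cid
        )
        largest = max(a, b)
        scores.append((b - a) / largest if largest > 0 else 0.0)
    return sum(scores) / len(scores)
```

Values close to 1 indicate compact, well-separated clusters, while values near 0 or below indicate overlapping ones. A date-based grouping of the memes could be scored the same way by passing its group identifiers as `cluster_ids`.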